Typhoon Virtual Environment

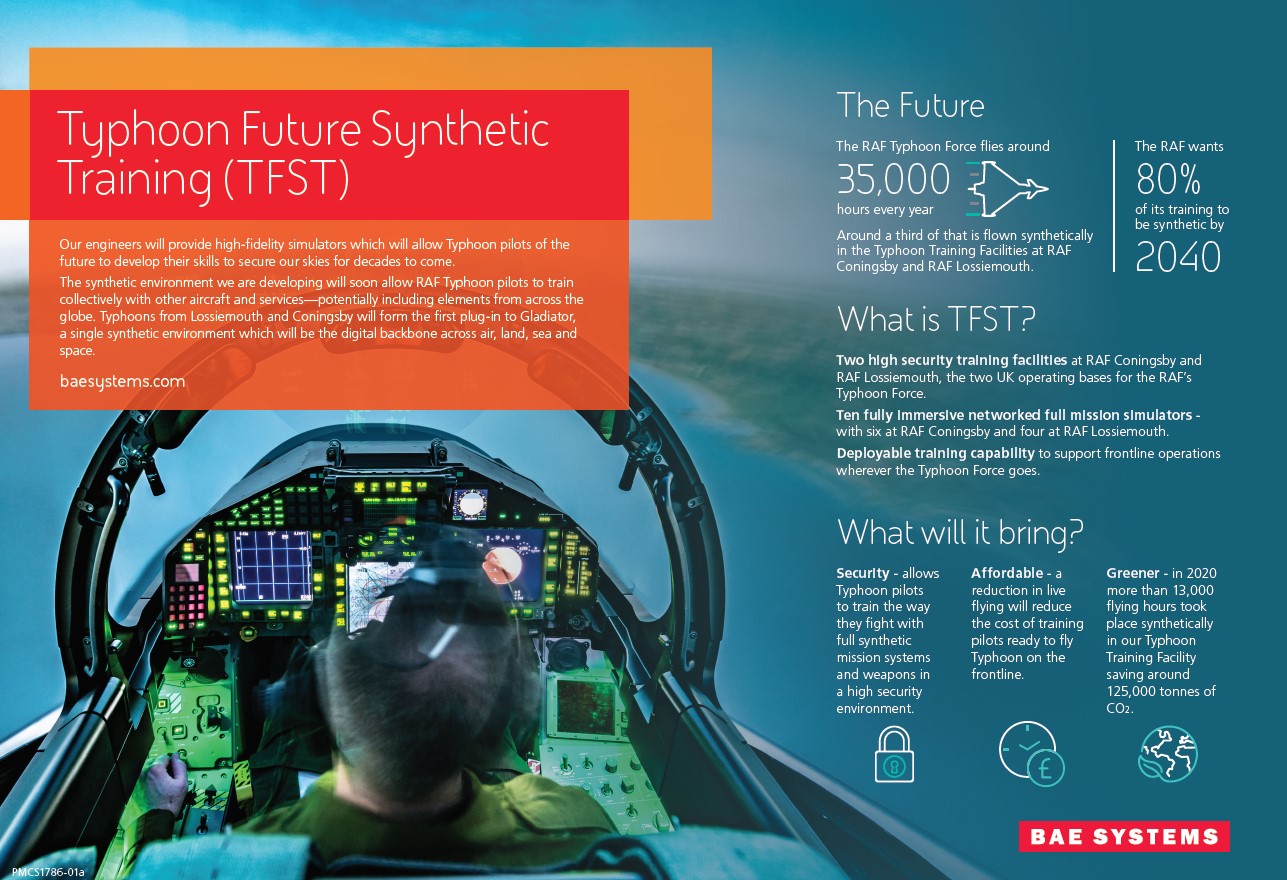

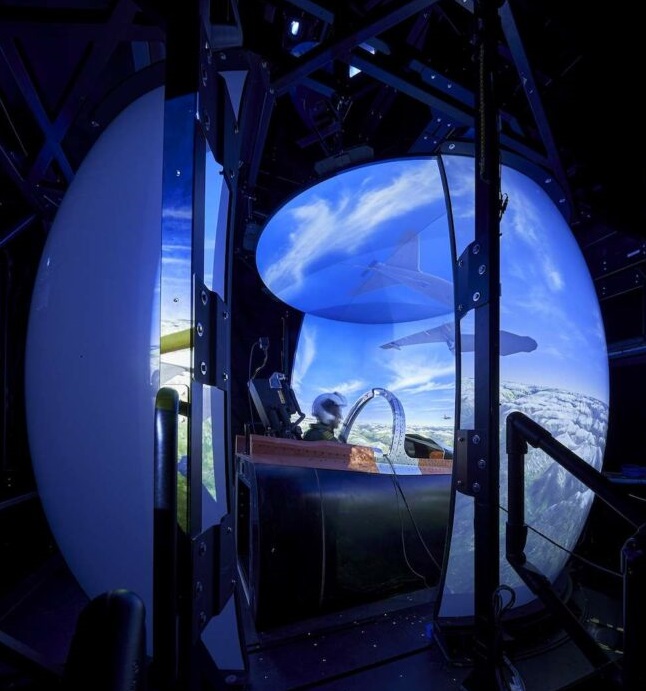

During my time at BAE Systems, I was involved in the development of their new Typhoon training simulator. The Typhoon Virtual Environment (TVE) was the latest iteration of the simulators that the team had been making for years. I joined the team at the very beginning of the life cycle, so everyone was on a more or less level playing field, which helped me get to know the work and start on the steep learning curve of everything the Typhoon can do and what we would need to simulate!

TVE was a step up from the previous simulator because the Typhoon simulation uses re-hosted software: the same software that runs on the jet. This means that any reaction that would happen on the jet happens in the sim in the same way. This brings some challenges, as the sensors and systems on the jet all expect real-world, analog data as input, which the simulator has to supply. The ultimate goal of the simulator is to allow the pilot to walk down a corridor and either turn left to the runway or right to the simulator and have the same experience.

Synthetic Environment Team

I was a part of the SE team, which worked on all the aspects of the simulation that weren't the Typhoon jet itself. This involved everything from terrain, weather and explosions to audio, enemies and visuals. We used a combination of in-house software and commercial off-the-shelf (COTS) products to achieve a rich environment, and aimed for interoperability with other simulators by using HLA (High Level Architecture), a standard for distributed simulation.

The main backbone of the environment is a product called VR-Forces, which provides a framework for us to load all of our data. From there we built our tools, services and applications to add things to the battlespace. When I first joined the team, I was tasked with becoming the Subject Matter Expert (SME) for VR-Forces. I needed to become familiar with the tool, learn how to use all of the features that were applicable to us, and extend its functionality to add things that were missing from the software but important to the simulation. I got to grips with how the application handles its entities, and I drew parallels with game engines and programming that I had done in the past. Essentially, it is a component-based application which allows you to attach tasks to entities and have those tasks play out at run-time. These tasks can be added to and strung together into plans to make more complex behaviours.
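To illustrate the idea of attaching tasks to entities and stringing them together into plans, here is a minimal Python sketch. It is not VR-Forces code and does not use its API; `Entity`, `Plan`, `move_to` and `report` are hypothetical names made up for this example, and the entity only moves along a single axis to keep things short.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Entity:
    """A simulated object; position is one-dimensional for simplicity."""
    name: str
    position: float = 0.0
    log: List[str] = field(default_factory=list)


# A task advances an entity by one step and returns True once it has finished.
Task = Callable[[Entity], bool]


def move_to(target: float, speed: float) -> Task:
    """Hypothetical task: move towards `target` by at most `speed` per tick."""
    def step(entity: Entity) -> bool:
        remaining = target - entity.position
        if abs(remaining) <= speed:
            entity.position = target
            entity.log.append(f"arrived at {target}")
            return True
        entity.position += speed if remaining > 0 else -speed
        return False
    return step


def report(message: str) -> Task:
    """Hypothetical task: record a message and finish immediately."""
    def step(entity: Entity) -> bool:
        entity.log.append(message)
        return True
    return step


@dataclass
class Plan:
    """An ordered list of tasks that are run one after another."""
    tasks: List[Task]
    current: int = 0

    def tick(self, entity: Entity) -> bool:
        """Run one step of the current task; return True when the plan is done."""
        if self.current >= len(self.tasks):
            return True
        if self.tasks[self.current](entity):
            self.current += 1
        return self.current >= len(self.tasks)


def run(entity: Entity, plan: Plan, max_ticks: int = 1000) -> int:
    """Tick the plan until it finishes (or max_ticks is hit); return ticks used."""
    ticks = 0
    while ticks < max_ticks:
        ticks += 1
        if plan.tick(entity):
            break
    return ticks
```

A plan such as `Plan([move_to(3.0, 1.0), report("on station")])` would first walk the entity to position 3.0 and then log the message, which is the same "simple tasks chained into a more complex behaviour" pattern described above.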

Once the project was fully underway, I showed interest in leading a core component of the simulation: the audio. With my background in music and audio technology, I was assigned to be the owner of the audio system and all of its components within the simulator, managing, processing and creating all of the sound that occurred. If it made a noise, it was my job to make sure that happened.

Audio System

The main component of the audio system comes from a company called ASTi. Their product, Telestra, allows you to model and simulate sounds and communication devices such as radios. Radio communication is a big part of warfare, and as such is a big part of simulation too. Using the Telestra solution, I was able to create realistic communication channels that matched the real-life Typhoon's radio and communication capability and reacted to environmental conditions and terrain obstructions.

Sound effects are a big part of achieving any level of immersion. Our relationship with sound as humans is a very close but often misunderstood one. We don't often realise just how much sound affects our perception of something until it is wrong, and even then it is difficult for people to explain what is wrong or why. This was one of the biggest challenges I faced when it came to creating the sound effects for the simulator. Unlike warning tones or aural cues, where there is an audio file that can be referenced or played, simulating engines, missiles or other subtle aspects of a jet in flight can be quite subjective, and in such a secretive business, getting references is mostly impossible. I decided that to get an objective sound, I would need to take an average of multiple subjective opinions from experts (pilots who have flown the jet and experienced these sounds). Pleasing everyone isn't easy, if it is possible at all, but this more scientific approach meant that I could get as close to an objective sound as possible within the restrictions in place.
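As a rough illustration of averaging several subjective opinions, the Python sketch below combines per-expert scores into a mean and a spread for each aspect of a sound. The function name `consensus`, the aspect names and the scores in the usage note are all made up for the example; this is not the actual process or data used on the project.

```python
from statistics import mean, stdev


def consensus(ratings):
    """Combine expert scores per aspect.

    ratings: {aspect name: [one score per expert]}
    returns: {aspect name: (mean score, spread)}
    The spread is the sample standard deviation, or 0.0 for a single score.
    """
    result = {}
    for aspect, scores in ratings.items():
        spread = stdev(scores) if len(scores) > 1 else 0.0
        result[aspect] = (mean(scores), spread)
    return result
```

For example, `consensus({"engine_rumble": [6, 8, 7]})` gives a mean of 7 with a spread of 1.0. A large spread is a hint that the experts disagree and that the aspect needs another round of listening rather than a simple average.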

Instructor Communications

The secondary aspect of the training sim is the instructor station where an instructor pilot has full control over the battle theater. They can control any AI wingmen or enemies, weather conditions, aircraft faults and also see any and all of the jet's telemetry so they can help the pilot in the seat if needs be.

In the TVE simulator, the Instructor Operating Station is in a completely different room, so communication systems are more important than they have ever been in a BAE-produced simulator. In comes the Comms Panel!

The Comms Panel is a touch-screen application made in C# and WPF that gives an instructor control of his radios, intercoms and volumes. It is designed to be the instructor's view into the communication settings for both himself and the pilot he is instructing. As the Typhoon has multiple communication systems, each with multiple settings that can be switched to at any time, the Comms Panel needed to be easy to understand and navigate while also displaying all of the available comms settings, be they active or just loaded in, ready to be used later. Leveraging designs from the previous comms solutions and from industry-leading online communication software (Discord, TeamSpeak, etc.), I was able to design the Comms Panel from scratch to give ultimate flexibility, speed in using the features, and clear feedback when the communication-scape gets busy, as it would in a real radio frequency situation with both military and civil communication traffic.

Again, one of the learning curves I had to overcome was all the standards and long-established terms and technologies of real-life radio communication. Communication over radio waves has been around for over 100 years, and there is a lot to get familiar with when it comes to simulating it all, especially working out what is technically needed and not needed in a simulation of radio frequencies.

Future capability additions to the communication simulation include things such as wave propagation, atmospheric calculations and other factors that contribute to the decay of a radio signal over distance. Another addition is to the role-playing side of the simulation. Sometimes, a single instructor is managing multiple aspects of a mission that, in real life, would take multiple people to achieve (air traffic controllers, tanker pilots, other air crew). To add another layer of immersion, a speech synthesis package could be made to hook into the communication system, allowing realistic voices to be heard over the radio, generated by either a text-to-speech component or a speech recognition component. This would allow for masking of the instructor's voice and open up opportunities for complex, multi-manned components in missions.
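The decay of a radio signal over distance mentioned above can be illustrated with the textbook free-space path loss formula, which ignores terrain, atmospherics and antenna effects. The Python sketch below is only an illustration of that formula; it is not how TVE or Telestra models propagation, and the function names are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss in dB: 20 * log10(4 * pi * d * f / c).

    Assumes an unobstructed line of sight and ideal isotropic antennas.
    """
    if distance_m <= 0 or frequency_hz <= 0:
        raise ValueError("distance and frequency must be positive")
    ratio = 4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S
    return 20.0 * math.log10(ratio)


def received_power_dbm(tx_power_dbm: float, distance_m: float,
                       frequency_hz: float) -> float:
    """Received power for a given transmit power, with path loss as the only loss."""
    return tx_power_dbm - free_space_path_loss_db(distance_m, frequency_hz)
```

In this model, every doubling of distance costs roughly 6 dB of signal. A fuller propagation model would layer terrain masking and atmospheric effects on top of this baseline.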

In Media

Unfortunately, as you might expect with a military project, I can't go into any more detail. That said, here are some links to the project appearing in news and media, which will hopefully give some more general information.

Hyper Realism in Simulator Training

BAE Wins Typhoon Training Bid

£220 Million Contract

Platform: Windows

Languages/Technology: C#, WPF, ASTi Telestra